The Problem

When I develop tools for internal use, my users are process engineers. Tech-savvy people, but not coders. The problem domain is technical in nature, which should make it the perfect place to create tooling that automates project production. We spend thousands of hours every year, as does every competitor, on checking numbers and transposing tables and datasets using ordinary office software. We deliver on quality and cost, but we are still inefficient.

We’ve all experienced the users with a “vision”. We’ve had it ourselves. The big framework in the sky. The game-changing application that does everything and knows everything. Attempts at making this application become bloated, costly and maintenance-heavy, and often fail to materialize.

Consider the typical setup of such engineers. There is a big project about to be delivered. The project can be broken down into subsystems. The principal engineer then distributes the workload between the available resources:

All engineers do basically the same work, but on different parts of the system. The complexity of the end product decides the required skill level of the engineer. A complex subsystem will be handled by a senior engineer.

In reality, this is a false premise. The complexity of the end product is irrelevant. What matters is the complexity of the work to produce it.

When re-examining the workflow in order to automate it, it is more interesting to let the workflow be shaped by the emergent properties of the new problem domain, which is the project execution, not the end product.

When working in a tight loop with this person, we compose sovereign functions that cover various (disconnected) parts of his workload.

Basically, I have created one empowered user. One user who can field test the functions. Note that I haven’t created any application yet, only functions. There is an execution engine. But at this point the functions are tailor-made for the individual user.

If you were to cram all these functions into the same app, you would incur a significant maintenance debt. The functions behave differently and change for different reasons. The volatility of the end app is the sum of the volatility of its parts. Immature functions will immediately clutter the design, both the architecture and the GUI.

When field testing the functions, it is common to group them chronologically or by subsystem. However, there is another, often overlooked, aspect to consider: the complexity of applying the function to the workload.

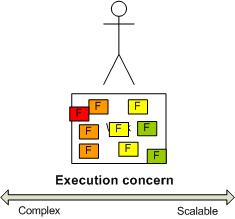

When field testing the functions, new classifications emerge.

- Some functions (green) can be run straight out-of-the-box. A button would do.

- Some functions (yellow) demand tweaking in a few places. They can be executed, but with options.

- Some functions (orange), however, demand unpredictable tweaking, and their parameterization is hard to identify.

- And finally you have the functions (red) that seemingly demand the same complexity as the computer code itself.
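The four categories can be recorded next to each function, so the grouping is by how hard the function is to apply rather than by subsystem. What follows is only a minimal sketch, written in Python for brevity (the production code discussed in this post is C#). The names `Category`, `FieldFunction`, `gui_candidates` and the three sample functions are hypothetical and not taken from any real tool.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List


class Category(Enum):
    """How hard it is to apply a function to the workload."""
    GREEN = 1   # runs out of the box; a button would do
    YELLOW = 2  # runs with a few known options
    ORANGE = 3  # needs unpredictable tweaking; parameters are hard to pin down
    RED = 4     # needs about as much flexibility as the code itself


@dataclass
class FieldFunction:
    name: str                   # hypothetical label, for illustration only
    run: Callable[..., object]  # the function being field tested
    category: Category


def gui_candidates(functions: List[FieldFunction]) -> List[str]:
    """Names of functions simple enough for a button or an options dialog."""
    simple = (Category.GREEN, Category.YELLOW)
    return [f.name for f in functions if f.category in simple]


# Hypothetical functions; the lambdas stand in for real work.
functions = [
    FieldFunction("transpose_table", lambda rows: list(zip(*rows)), Category.GREEN),
    FieldFunction("check_totals", lambda rows, tolerance=0.01: True, Category.YELLOW),
    FieldFunction("reconcile_datasets", lambda *args: None, Category.RED),
]

print(gui_candidates(functions))
```

In this sketch only the green and yellow functions are offered to a GUI. The red one stays a plain function that someone has to run by hand.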

Cramming all such responsibilities into a single application is IMO a heavy contributor to application bloat, code rot and the ol’ saying “software is never done, it is abandoned”. The problem is that application developers will fail to properly absorb user requirements in the red and orange categories. In an organization where the developer should not be assumed to know the complexity of the problem domain, this is very costly indeed. Especially if your toolchain has an expensive and slow release process.

The Solution

You do not go looking for ways to implement a GUI for the orange/red category. Instead, you let the principal engineers earn their pay.

Also, as we keep running the functions, we learn more about them, and we are able to “push” them towards the “green” category. And, as we discover new aspects of our workflow, functions can be spawned in the yellow/orange category and, through field testing, end up in the green/yellow category.

So, NOW, we create the GUI.

Yes, I’m serious. The principal engineer is actually handling computer code (C#) in production. This model has some additional key benefits that may not be obvious.

- The whole workforce is now a living design process.

- As they mature, functions gravitate towards the green.

- App1 is very simple, with functions and a workflow that were well tested before its first version was released. It handles the bulk of the work. Changes are easy, safe and inexpensive.

- Apps of type “App2” may be more complex, but their complexity is warranted, and they target a more focused problem domain and a dedicated audience. Typically specialized roles/contexts. Stuff you don’t want to clutter up the common case with.

- Functions that are complex, one-shot, or hard for people to define can be put into production in the orange and red categories and left to mature for a while.

- There is a natural progression and common ground between the developer and the principal engineers.

- The process is no longer constrained by the GUI and is never blocked. Whenever App1 does not cover something because of some rare case, chances are that the principal engineer can run the underlying function. We do not need to put a GUI harness around every single conceivable usage.
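The last point can be illustrated with a function that is reachable two ways: through a button that only uses its defaults, and directly when a rare case comes up. This is a hedged sketch in Python rather than the C# used in production. The function `scale_flow_rates`, its parameters, the handler `on_button_click` and the sample rows are all made up for illustration.

```python
def scale_flow_rates(rows, factor=1.0, skip=()):
    """Hypothetical function: scale the 'flow' value of every row not in `skip`."""
    return [
        {**row, "flow": row["flow"] * factor}
        for row in rows
        if row["tag"] not in skip
    ]


def on_button_click(rows):
    """What a button in the simple app would call: the common case, defaults only."""
    return scale_flow_rates(rows)


# Made-up sample data.
rows = [
    {"tag": "P-101", "flow": 10.0},
    {"tag": "P-102", "flow": 20.0},
]

# Common case: the button covers it.
common = on_button_click(rows)

# Rare case: no GUI exists for these options, so the function is run directly.
rare = scale_flow_rates(rows, factor=1.5, skip=("P-102",))
print(rare)
```

The GUI exposes only the path that has matured. The extra parameters remain available to whoever is comfortable calling the function itself, so the rare case never waits for a new release of the app.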

And, it friggin’ works.